Yun Chen

Ph.D. Candidate / Researcher

About Me

I am a Ph.D. candidate at the University of Toronto advised by Prof. Raquel Urtasun, and a Senior Researcher at waabi.ai.

My research interests lie at the intersection of computer vision, neural rendering, and autonomous driving. I am passionate about finding simple, elegant solutions to challenging problems.

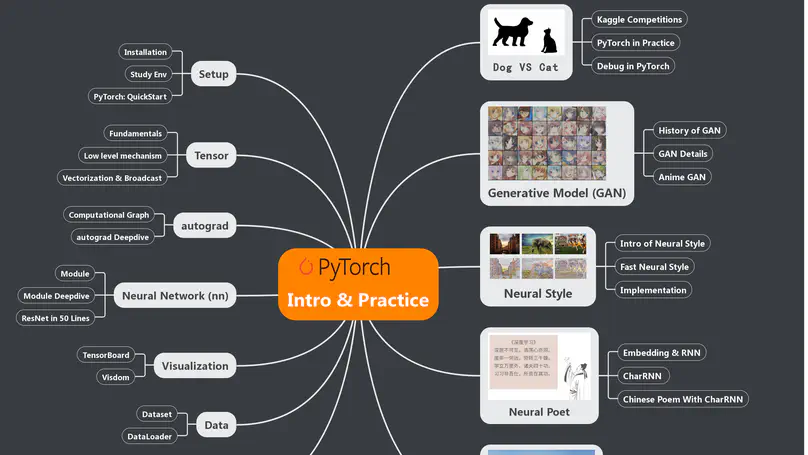

Beyond academia, I am an advocate of the Unix philosophy, a Linux enthusiast, and the author of a bestselling PyTorch book in Chinese.

There should be one – and preferably only one – obvious way to do it. Although that way may not be obvious at first.

Research

Experience

Working closely with Prof. Raquel Urtasun and Prof. Shenlong Wang on 3D simulation.

- 3D reconstruction from large-scale, weakly labelled data in the wild

- Photorealistic Image Simulation with Geometry-Aware Composition for Self-Driving

Working closely with Ming Liang and Bin Yang in ATG R&D on 3D perception tasks.

- Depth completion: densifying LiDAR with image guidance; SOTA on KITTI.

- 3D perception: multi-sensor 3D detection and tracking; new SOTA on KITTI.

- Map structure learning with graph neural networks; new SOTA on Argoverse motion forecasting.

Working on medical imaging in the Machine Intelligence Group led by Dr. Xian-Sheng Hua.

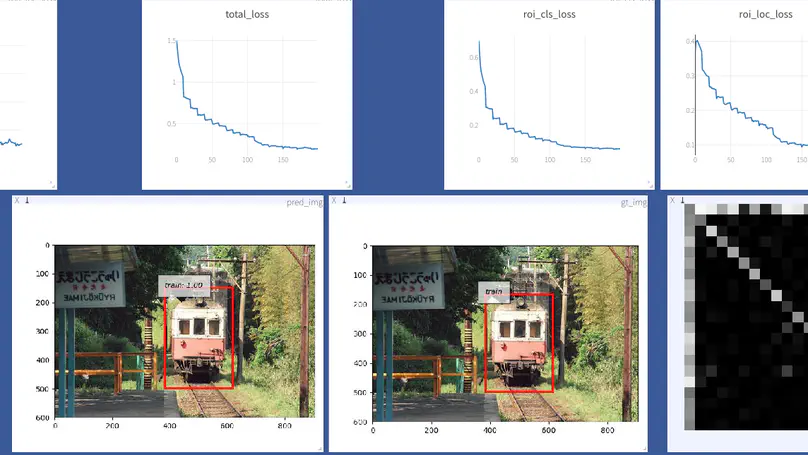

- Developed a 3D R-CNN for CT/MRI that is faster and more accurate than radiologists.

- Explored weakly supervised learning with limited labels, and active learning for efficient labelling.